easytranscriber: Speech recognition with precise timestamps

easytranscriber is an automatic speech recognition library for transcription with precise word-level timestamps. It is 35% to 102% faster than WhisperX by leveraging…

A datalab at the National Library of Sweden

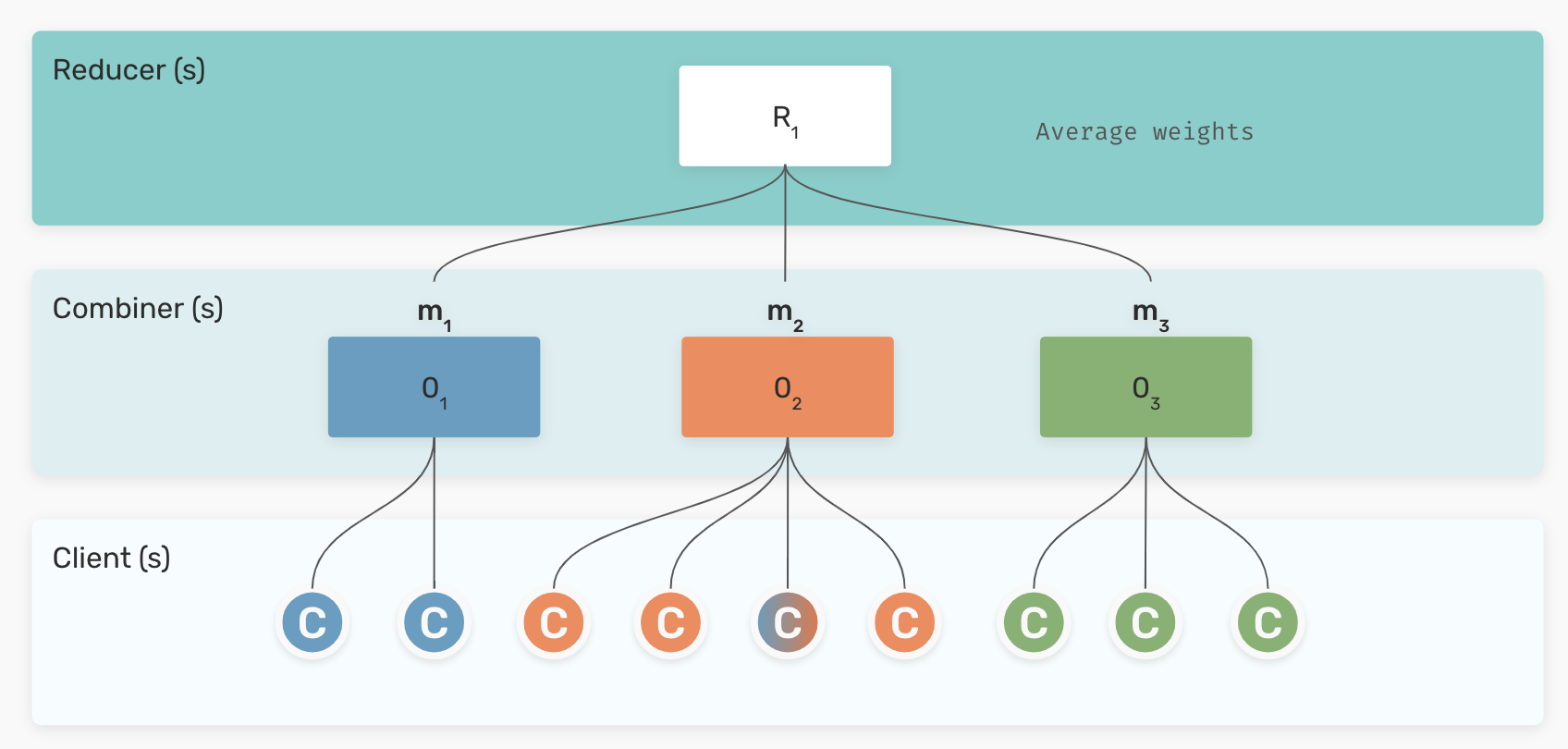

We trained a bilingual Swedish-Norwegian ELECTRA language model in a federated setup, showcasing LM training when various…